model <- keras$Model(vgg16$input,

vgg16$get_layer("flatten")$output)

final_conv_pred <- haku |>

keras$layers$Resizing(224L, 224L)() |>

torch$unsqueeze(0L) |>

keras$applications$vgg16$preprocess_input() |>

model$predict()

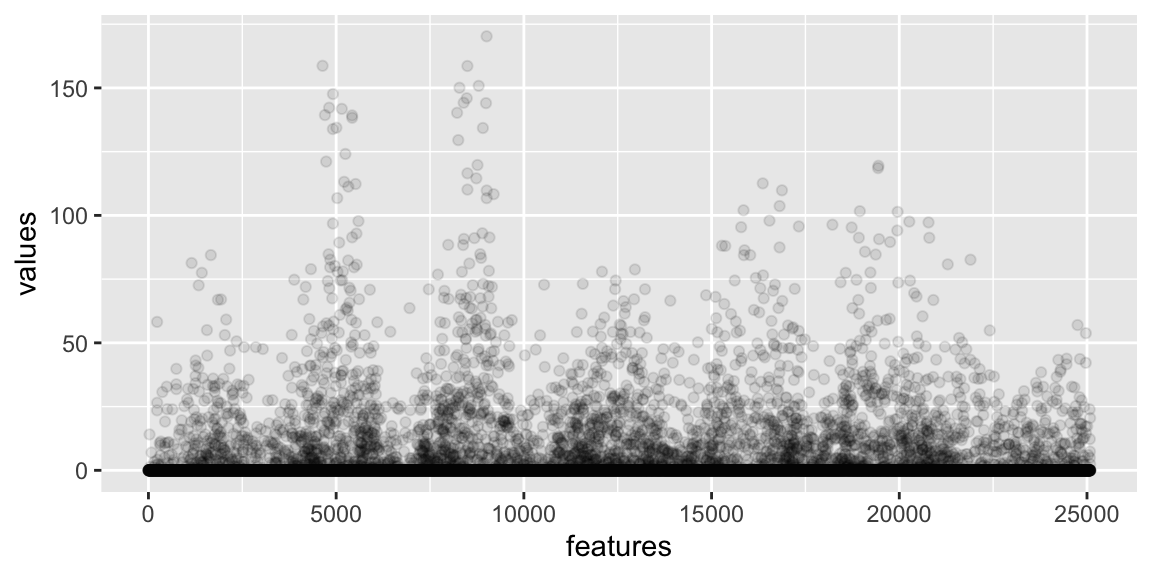

data.frame(features = 1:25088,

values = py_to_r(final_conv_pred[0])) |>

ggplot() +

geom_point(aes(features, values), alpha = 0.1)Deep Learning and Computer Vision in R: A Practical Introduction (3)

BIBC2025 workshop - CV model interpretation

Patrick Li

RSFAS, ANU

Content summary

- Overview of computer vision (CV)

- reticulate basics

- Image classification

- Hyperparameter tuning

- > CV model interpretation

- Object detection

- Image segmentation

CV model interpretation


Model interpretation in computer vision refers to the set of methods and principles used to understand how a vision model processes visual information and arrives at its predictions.

It remains a developing and often underemphasised area in computer vision, particularly in the era of predictive modelling and deep learning.

CNN model interpretation

CNN models learn simple visual features in their early layers and increasingly complex ones in later layers. Let’s use Haku as an example.

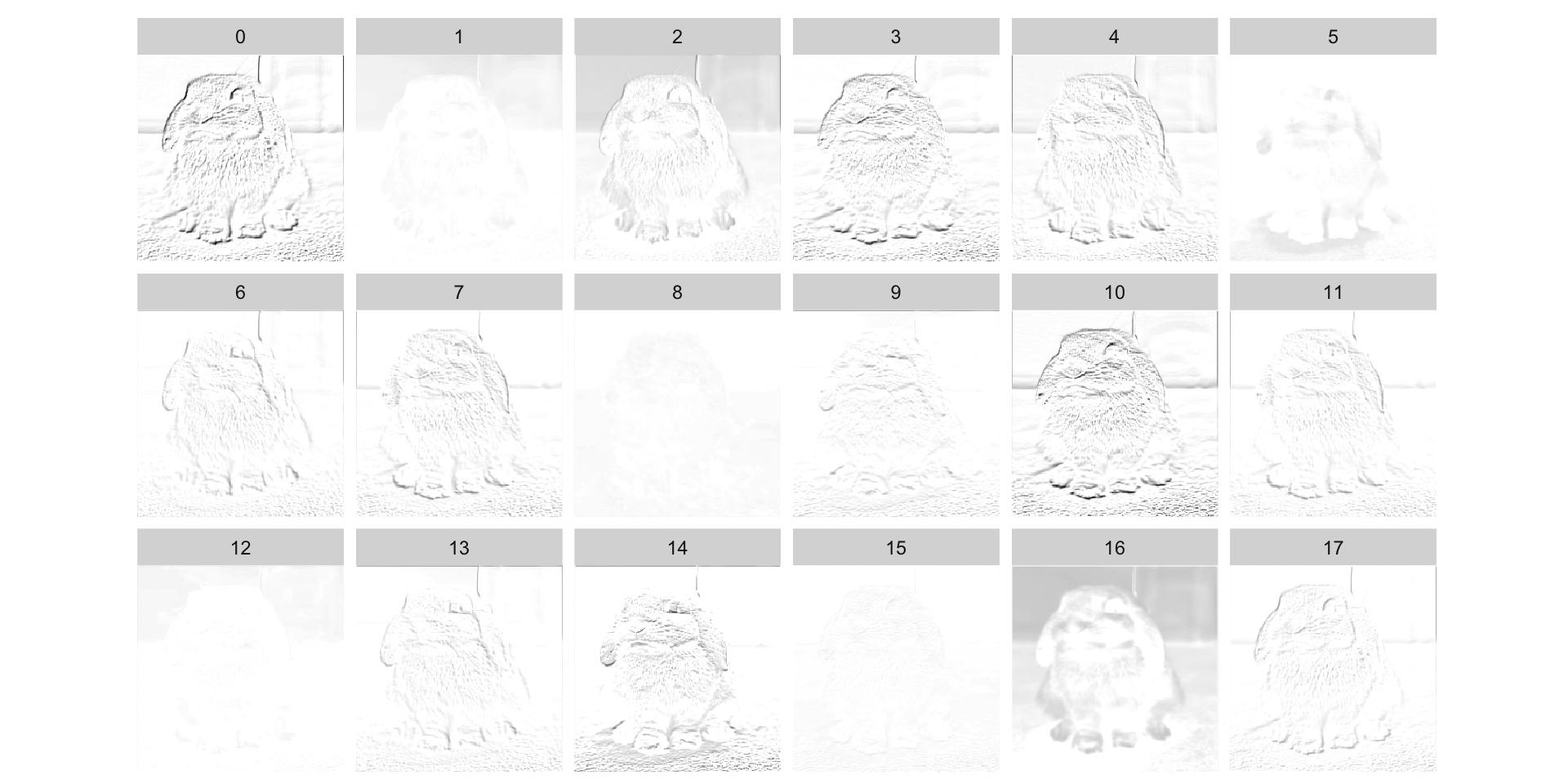

block1_conv1 in VGG16

It detects simple edges, basic color and contrast transitions, and tiny texture elements. Nothing here is semantic: these are the primitive building blocks that deeper layers recombine into meaningful shapes.
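To make "detects simple edges" concrete, the base R sketch below applies a hand-crafted 3 x 3 Sobel kernel to a toy image with a vertical edge. This is an assumption for illustration: the Sobel kernel is a classic edge filter standing in for a learned first-layer filter, not a filter taken from VGG16, and `conv2d_valid()` is a hypothetical helper written for this example.

```r
# Hand-crafted Sobel kernel responding to vertical (left-to-right) edges;
# a stand-in for a learned first-layer filter, NOT taken from VGG16
sobel_x <- matrix(c(-1, 0, 1,
                    -2, 0, 2,
                    -1, 0, 1), nrow = 3, byrow = TRUE)

# "Valid" 2D cross-correlation with stride 1 (what conv layers compute)
conv2d_valid <- function(img, kernel) {
  kh <- nrow(kernel); kw <- ncol(kernel)
  out <- matrix(0, nrow(img) - kh + 1, ncol(img) - kw + 1)
  for (i in seq_len(nrow(out))) {
    for (j in seq_len(ncol(out))) {
      patch <- img[i:(i + kh - 1), j:(j + kw - 1)]
      out[i, j] <- sum(patch * kernel)
    }
  }
  out
}

# Toy 8 x 8 grayscale image: dark left half, bright right half
img <- matrix(0, 8, 8)
img[, 5:8] <- 1

# Filter response followed by ReLU, as in a conv layer with ReLU activation
response <- pmax(conv2d_valid(img, sobel_x), 0)
response
```

The response is non-zero only in the columns whose 3 x 3 window straddles the dark/bright boundary, which is the kind of primitive, non-semantic signal the block1_conv1 maps show.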

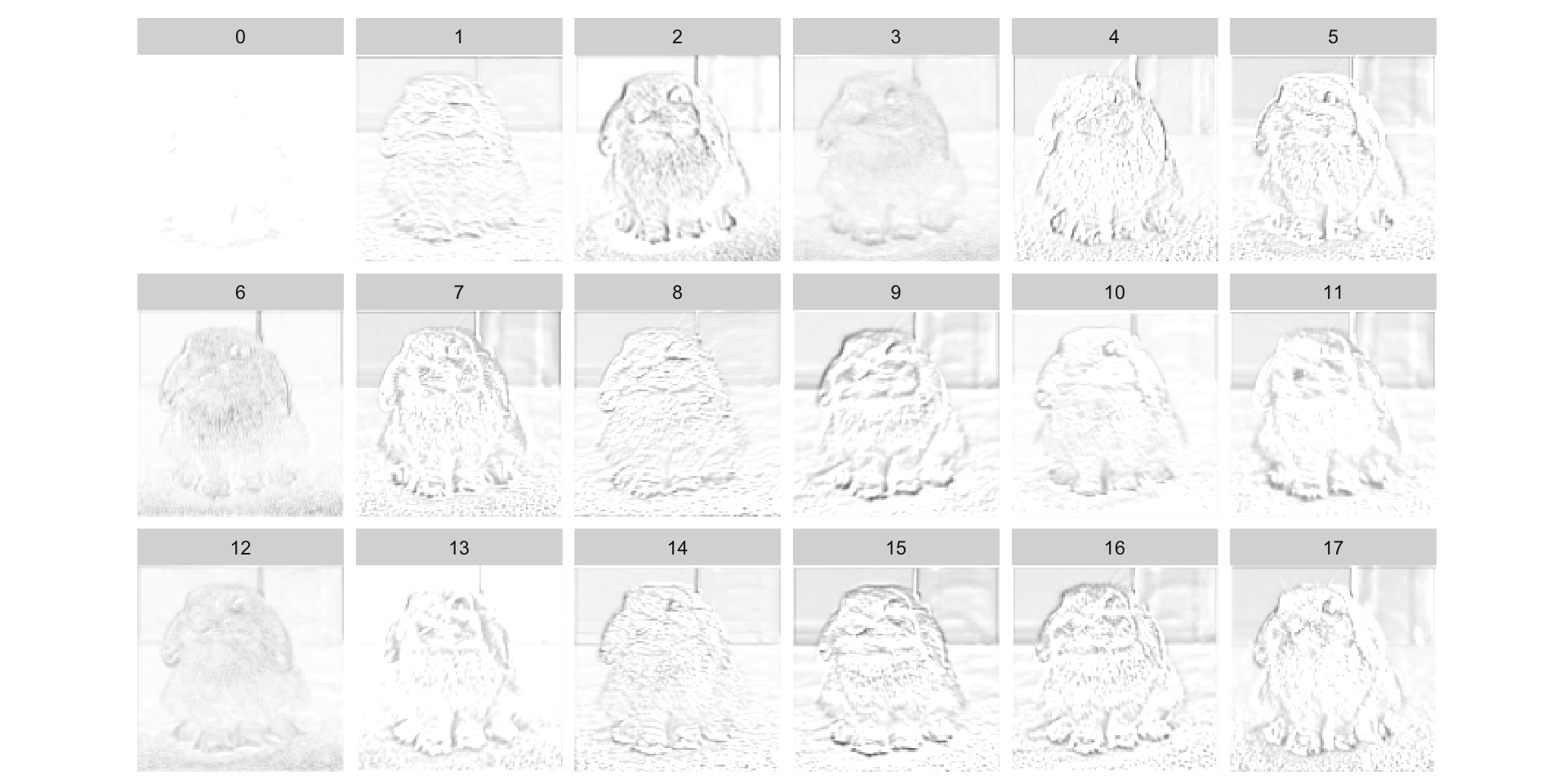

block2_conv1 in VGG16

It detects clusters of edges and coarse textures that outline the stuffed animal’s head, ears, and body fur. Some channels suppress background regions through contrast differences, but there is still no semantic understanding.

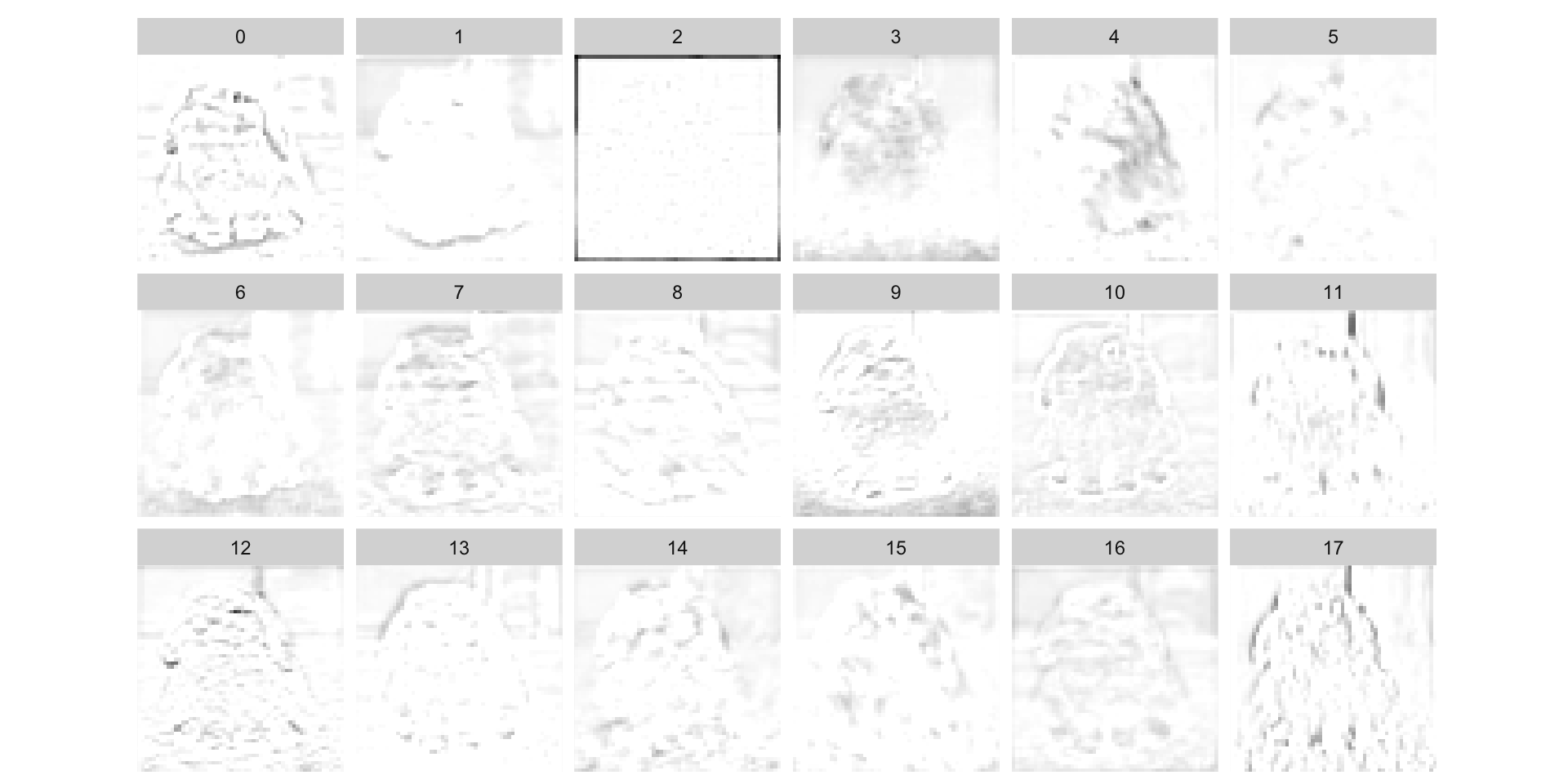

block3_conv1 in VGG16

It detects repeated fur-like textures, local curvature, and blob-level part cues (rough head/torso regions). However, its activations are diffuse and texture-dominated, so the overall object shape becomes blurred and less recognizable.

Embedding space

In CNNs, the embedding space is a high-dimensional vector representation of images learned by the network.

Outputs from the final convolutional block capture hierarchical visual features, mapping similar images close together and dissimilar ones farther apart.

Embeddings can be visualized or used for tasks such as retrieval, classification, and clustering.

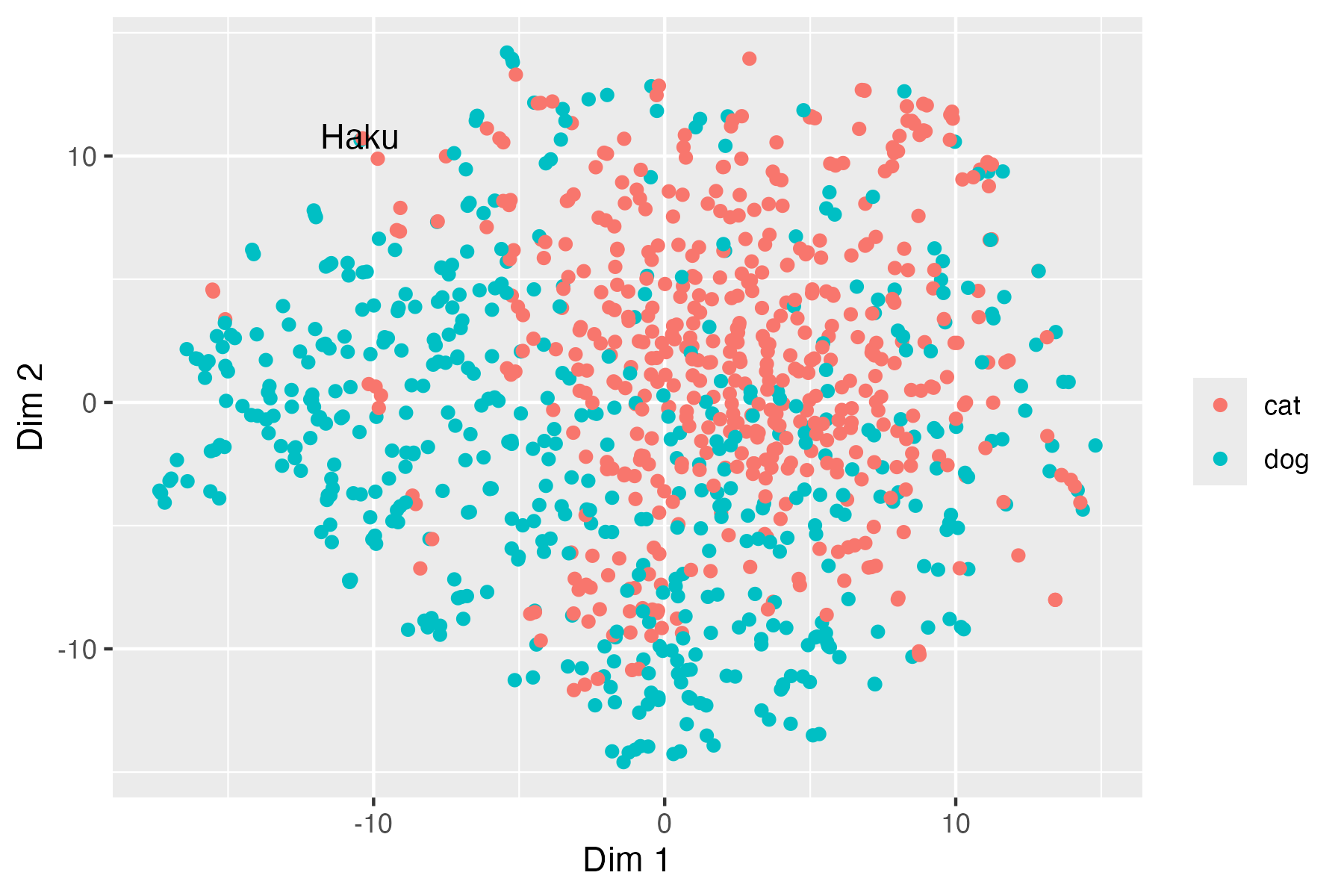
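The "similar images close together" idea is usually measured with cosine similarity, and retrieval is then a nearest-neighbour search. The base R sketch below assumes tiny hand-made 4-dimensional embeddings (the names `cat_a`, `dog_b` and the helper `cosine_sim()` are hypothetical); real VGG16 flatten-layer embeddings would have 25,088 dimensions but the computation is identical.

```r
# Hypothetical 4-dimensional embeddings for four images (real VGG16
# flatten-layer embeddings would have 25,088 dimensions)
emb <- rbind(
  cat_a = c(0.9, 0.1, 0.0, 0.2),
  cat_b = c(0.8, 0.2, 0.1, 0.2),
  dog_a = c(0.1, 0.9, 0.3, 0.0),
  dog_b = c(0.0, 0.8, 0.4, 0.1)
)

# Cosine similarity between all pairs of rows (1 = same direction)
cosine_sim <- function(x) {
  x_unit <- x / sqrt(rowSums(x^2))   # scale each row to unit length
  x_unit %*% t(x_unit)
}
sim <- cosine_sim(emb)
round(sim, 3)

# Retrieval: rank the other images by similarity to a query image
query <- "cat_a"
neighbours <- sort(sim[query, setdiff(rownames(emb), query)], decreasing = TRUE)
neighbours
```

With these toy vectors the nearest neighbour of `cat_a` is `cat_b`; clustering and classification on embeddings build on the same pairwise similarities.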

Visualize the embedding with t-SNE

Dimensionality reduction can be used to visualize the embedding space.

Significant Overlap: Cat and dog embeddings mix heavily, indicating weak class separation and substantial sharing of visual features in VGG16’s feature space.

Partial Clustering: Cats tend to group toward the right/upper region.

Haku’s Position: Haku sits at the boundary between the two classes, suggesting it is distinct from both yet still partially resembles visual aspects of cats and dogs.

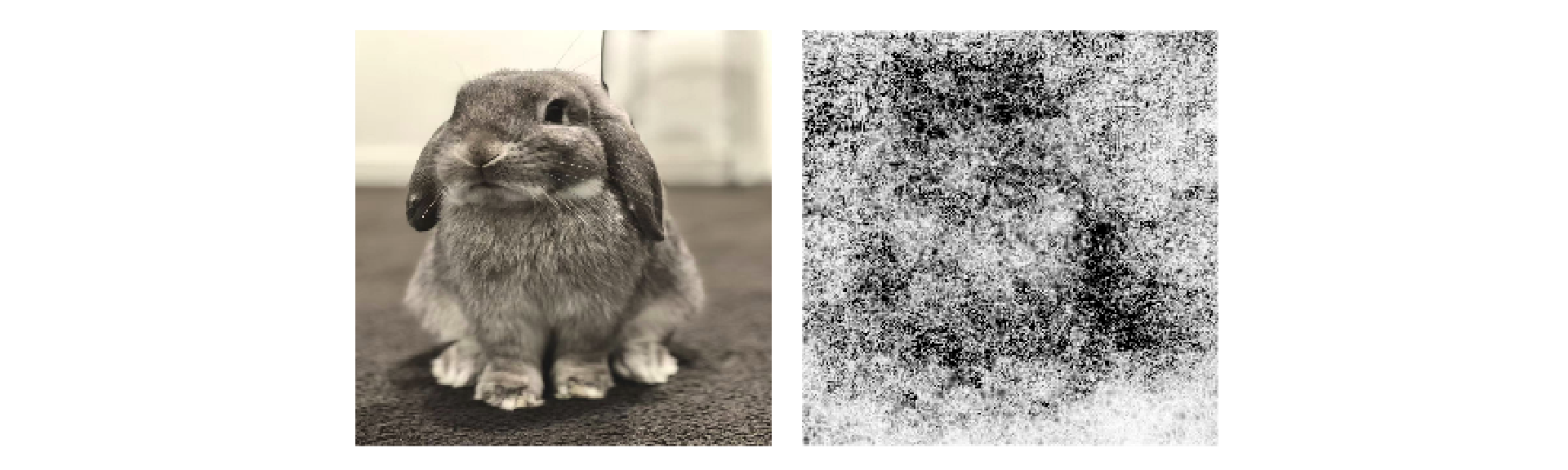
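t-SNE itself needs an add-on package (for example `Rtsne::Rtsne(X, dims = 2, perplexity = 10)`), so the dependency-free sketch below swaps in PCA via base R `prcomp()` to show the same workflow: project high-dimensional embeddings to 2D and colour the points by class. The embeddings are simulated and hypothetical (two overlapping classes). Note that PCA is linear and preserves global variance rather than local neighbourhoods, so its picture can differ from a t-SNE plot of the same data.

```r
# Hypothetical embeddings: 20 "cat" and 20 "dog" images with 50 features,
# where the two classes differ only slightly in their mean (overlapping)
set.seed(42)
n <- 20; p <- 50
cat_emb <- matrix(rnorm(n * p, mean = 0.0), n, p)
dog_emb <- matrix(rnorm(n * p, mean = 0.3), n, p)
emb <- rbind(cat_emb, dog_emb)
labels <- rep(c("cat", "dog"), each = n)

# Linear 2D projection with PCA (a stand-in for t-SNE in this sketch)
pca <- prcomp(emb, center = TRUE, scale. = FALSE)
coords <- pca$x[, 1:2]

plot(coords, col = ifelse(labels == "cat", "orange", "steelblue"),
     pch = 19, xlab = "PC1", ylab = "PC2")
```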

Vanilla gradient

Vanilla gradient gives the raw, unprocessed sensitivity of each input pixel to the model’s output, showing how small pixel changes would affect the class score.

Vanilla gradient maps are often noisy and tend to work best on simpler, task-specific models.

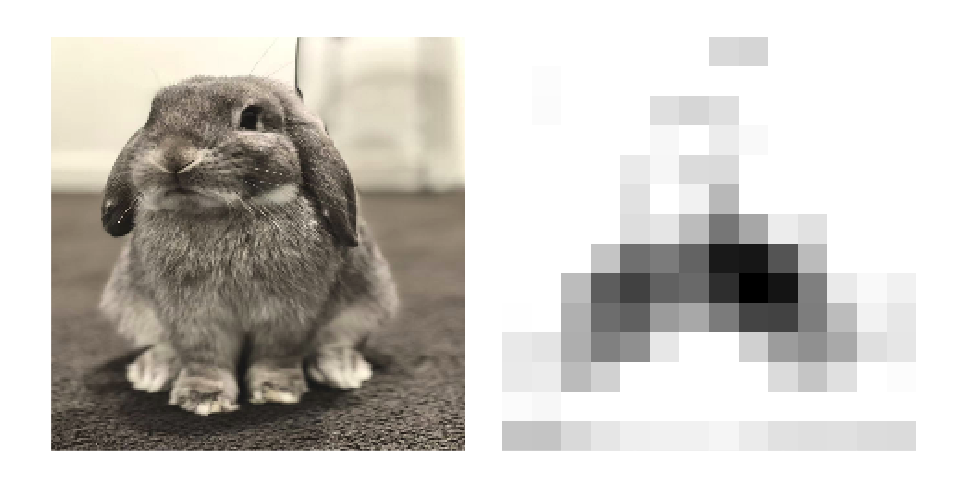
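A vanilla gradient is just the derivative of the class score with respect to each input pixel. The base R sketch below assumes a hypothetical toy network (16 pixels, 3 tanh hidden units, one score) whose gradient can be written out with the chain rule; in a real CNN the same quantity would come from automatic differentiation rather than a hand-derived formula. A finite-difference check confirms the gradient, and its absolute value reshaped to the image grid is the saliency map.

```r
# Hypothetical toy classifier: a flattened 4 x 4 image (16 pixels) ->
# 3 tanh hidden units -> one class score
set.seed(7)
W <- matrix(rnorm(3 * 16), nrow = 3)   # hidden-layer weights (3 x 16)
v <- rnorm(3)                           # output-layer weights
class_score <- function(x) sum(v * tanh(W %*% x))

# Vanilla gradient of the score w.r.t. every input pixel (chain rule):
# score = sum(v * tanh(W x))  =>  d score / dx = t(W) %*% (v * (1 - tanh(W x)^2))
vanilla_gradient <- function(x) {
  h <- tanh(W %*% x)
  as.vector(t(W) %*% (v * (1 - h^2)))
}

x <- runif(16)                 # toy input image, flattened
g <- vanilla_gradient(x)

# Sanity check with central finite differences
eps <- 1e-6
g_fd <- sapply(seq_along(x), function(i) {
  e <- replace(numeric(length(x)), i, eps)
  (class_score(x + e) - class_score(x - e)) / (2 * eps)
})
max(abs(g - g_fd))             # should be tiny

# Saliency map: absolute gradient reshaped to the 4 x 4 image grid
saliency <- matrix(abs(g), nrow = 4)
round(saliency, 3)
```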

Grad-CAM

Grad-CAM generates a class-specific heatmap by weighting the feature maps of the last convolutional layer with the gradients of the target class score with respect to those maps.

- Target layer: Pick a late convolutional layer with good spatial and semantic info.

- Activations: Record feature maps from the forward pass.

- Gradients: Backpropagate the class score to the feature maps.

- Pooling: Average gradients spatially to get channel weights.

- Combine: Weight feature maps and sum to form the heatmap.

- ReLU: Keep only positive contributions.

- Normalize: Scale to 0–1 and resize to input dimensions.
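The pooling, combining, ReLU and normalisation steps above reduce to a few array operations once the activations and gradients have been recorded. The base R sketch below assumes hypothetical, hand-made activations `A` and gradients `G` in a height x width x channels layout (channels last); `grad_cam()` is a helper written for this example. The resize step is approximated by nearest-neighbour upsampling with `kronecker()`, a simplification of the interpolation an image library would normally use.

```r
# Hypothetical activations A and gradients G of the class score w.r.t. A,
# laid out as height x width x channels (channels last), here 4 x 4 x 3
A <- array(seq_len(48) / 48, dim = c(4, 4, 3))
G <- array(0, dim = c(4, 4, 3))
G[, , 1] <- rep(c(0.5, 1.5), 8)          # spatial mean 1
G[, , 2] <- -0.5                          # spatial mean -0.5
G[, , 3] <- rep(c(0, 0.5), each = 8)     # spatial mean 0.25

grad_cam <- function(A, G) {
  # Pooling: average each channel's gradients over space -> channel weights
  alpha <- apply(G, 3, mean)
  # Combine: weighted sum of the feature maps over channels
  cam <- matrix(0, dim(A)[1], dim(A)[2])
  for (k in seq_along(alpha)) cam <- cam + alpha[k] * A[, , k]
  # ReLU: keep only positive contributions
  cam <- pmax(cam, 0)
  # Normalize: min-max scale to [0, 1] (skipped if the map is constant)
  rng <- max(cam) - min(cam)
  if (rng > 0) cam <- (cam - min(cam)) / rng
  cam
}

cam_map <- grad_cam(A, G)

# Resize: nearest-neighbour upsampling by a factor of 2 (4 x 4 -> 8 x 8)
upsampled <- kronecker(cam_map, matrix(1, 2, 2))
round(cam_map, 2)
```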

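```r
# Split VGG16 at the Grad-CAM target layer:
# first_part maps the image to block5_conv3 feature maps (14 x 14 x 512),
# second_part maps those feature maps to the class predictions
first_part <- keras$Model(vgg16$input,
                          vgg16$get_layer("block5_conv3")$output)
second_part <- keras$Model(vgg16$get_layer("block5_pool")$input,
                           vgg16$output)

# Resize Haku to 224 x 224 and add a batch dimension
resized <- haku |>
  keras$layers$Resizing(224L, 224L)() |>
  torch$unsqueeze(0L)
invisible(resized$requires_grad_())

# Forward pass: record the target-layer activations, then the class scores
first_pred <- resized |>
  keras$applications$vgg16$preprocess_input() |>
  first_part()
second_pred <- first_pred |>
  second_part()

# Gradient of the score for class index 330 w.r.t. the block5_conv3 activations
grad <- torch$autograd$grad(second_pred[0][330], first_pred)[0][0]

# Average gradients over the spatial dimensions (one weight per channel),
# weight the feature maps, sum over channels, and keep positive contributions
positive_map <- (grad$mean(dim = tuple(0L, 1L))$view(1L, 1L, 512L) * first_pred[0])$sum(dim = 2L) |>
  keras$layers$ReLU()()

# Min-max normalize to [0, 1], then invert so that high-importance regions
# appear dark in the grayscale plot
positive_map <- (positive_map - positive_map$min()) / (positive_map$max() - positive_map$min())
positive_map <- 1 - positive_map

# Plot the input image above the 14 x 14 heatmap
p1 <- resized[0]$detach()$cpu()$numpy() |>
  plot_rgb(axis = FALSE)
p2 <- positive_map$unsqueeze(-1L)$`repeat`(1L, 1L, 3L)$detach()$cpu()$numpy() |>
  plot_rgb(max_value = 1, axis = FALSE)

patchwork::wrap_plots(p1, p2, ncol = 1)
```

Other techniques in image classification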

Ensemble methods

Model Averaging / Voting: Combine predictions from multiple CNNs (e.g., ResNet, VGG) for more stable outputs.

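Snapshot Ensembles: Save snapshots of a single CNN at several points during one training run (typically at the end of each cycle of a cyclic learning-rate schedule) and combine them as an ensemble.

Stacking: Train a meta-model on the outputs of several base CNNs to learn how best to combine them.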
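The difference between averaging and voting is easiest to see on concrete numbers. The base R sketch below assumes hypothetical class-probability matrices from three CNNs (rows are images, columns are classes) and compares model averaging (soft voting) with majority (hard) voting. Snapshot ensembles would combine their members the same way; stacking would replace the fixed average with a trained meta-model, which is not shown.

```r
# Hypothetical class probabilities from three CNNs for 4 images x 3 classes
# (rows = images, columns = classes; each row sums to 1)
classes <- c("cat", "dog", "rabbit")
p_resnet <- matrix(c(0.70, 0.20, 0.10,
                     0.40, 0.50, 0.10,
                     0.20, 0.30, 0.50,
                     0.35, 0.40, 0.25), ncol = 3, byrow = TRUE)
p_vgg    <- matrix(c(0.60, 0.30, 0.10,
                     0.60, 0.30, 0.10,
                     0.10, 0.20, 0.70,
                     0.90, 0.05, 0.05), ncol = 3, byrow = TRUE)
p_other  <- matrix(c(0.50, 0.40, 0.10,
                     0.50, 0.40, 0.10,
                     0.30, 0.40, 0.30,
                     0.30, 0.45, 0.25), ncol = 3, byrow = TRUE)
models <- list(p_resnet, p_vgg, p_other)

# Model averaging (soft voting): mean probability per class, then argmax
p_avg <- Reduce(`+`, models) / length(models)
soft_vote <- classes[max.col(p_avg, ties.method = "first")]

# Majority (hard) voting: each model votes for its own argmax class
votes <- sapply(models, function(p) max.col(p, ties.method = "first"))
hard_vote <- classes[apply(votes, 1, function(v) as.integer(names(which.max(table(v)))))]

soft_vote
hard_vote
```

The two rules agree on the first three images but disagree on the fourth: one very confident model outweighs two lukewarm ones under averaging, whereas under voting each model counts once.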

Self-supervised learning

Self-supervised learning learns meaningful representations from unlabeled data using pretext tasks and fine-tunes them for downstream tasks.

- No labels required during pretraining.

- Pretext tasks teach the network useful features (e.g., predicting missing image patches, rotations, or contrastive similarity). We then fine-tune the learned representations for classification.
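As an illustration of one pretext task listed above (predicting rotations), the base R sketch below turns unlabeled images into a labelled dataset for free: each image is rotated by 0, 90, 180 and 270 degrees and labelled 0 to 3. The helpers `rotate90()` and `make_rotation_task()` and the toy images are hypothetical; the network that would then be trained on these pairs, and later fine-tuned for classification, is not shown.

```r
# Rotate a matrix (a grayscale image) 90 degrees clockwise
rotate90 <- function(m) t(m[nrow(m):1, , drop = FALSE])

# Build a rotation-prediction pretext dataset from unlabeled images:
# every image yields four inputs, labelled 0, 1, 2, 3 (= k x 90 degrees)
make_rotation_task <- function(images) {
  inputs <- list()
  labels <- integer(0)
  for (img in images) {
    rotated <- img
    for (k in 0:3) {
      inputs[[length(inputs) + 1]] <- rotated
      labels <- c(labels, k)
      rotated <- rotate90(rotated)
    }
  }
  list(inputs = inputs, labels = labels)
}

set.seed(1)
unlabeled <- list(matrix(1:9, 3, 3), matrix(runif(9), 3, 3))
task <- make_rotation_task(unlabeled)
length(task$inputs)   # 8 inputs: 4 rotations x 2 images
task$labels           # 0 1 2 3 0 1 2 3
```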


Multimodal models

Multimodal models jointly learn from multiple data types.

Modern multimodal models are dominated by transformer-based architectures (typically with ViT image encoders), because images split into patches and text can both be treated as token sequences and fused within one model.

CLIP-style contrastive learning: Uses separate image and text encoders to map both modalities into a shared embedding space, with a contrastive loss pulling matched pairs together and pushing mismatched pairs apart.

- Zero-shot: Classify a new image by comparing its embedding with text prompts and choosing the highest-similarity one.
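The CLIP-style objective and zero-shot rule can be sketched with plain matrices once the encoders have produced embeddings. The base R example below assumes hypothetical 3-dimensional image and text embeddings and a fixed temperature of 0.07 (CLIP learns this value; fixing it is a simplification). `clip_loss()` is a symmetric cross-entropy over the image-text similarity matrix in which row i's correct match is column i; `zero_shot()` picks the most similar prompt. All helper names are hypothetical.

```r
# Scale each row to unit length so dot products become cosine similarities
l2_normalise <- function(x) x / sqrt(rowSums(x^2))

# Cross-entropy averaged over rows, where row i's correct class is column i
diag_cross_entropy <- function(logits) {
  log_sum_exp <- apply(logits, 1, function(z) max(z) + log(sum(exp(z - max(z)))))
  log_probs <- logits - log_sum_exp      # row-wise log-softmax
  -mean(diag(log_probs))
}

# Symmetric contrastive (CLIP-style) loss for a batch of matched pairs:
# row i of img_emb and row i of txt_emb describe the same image
clip_loss <- function(img_emb, txt_emb, temperature = 0.07) {
  logits <- l2_normalise(img_emb) %*% t(l2_normalise(txt_emb)) / temperature
  (diag_cross_entropy(logits) + diag_cross_entropy(t(logits))) / 2
}

# Zero-shot classification: pick the prompt whose embedding is most similar
zero_shot <- function(img_emb, prompt_emb, prompts) {
  sims <- l2_normalise(img_emb) %*% t(l2_normalise(prompt_emb))
  prompts[max.col(sims, ties.method = "first")]
}

# Hypothetical 3-d embeddings (real encoders output hundreds of dimensions)
img <- rbind(c(0.9, 0.1, 0.0),
             c(0.1, 0.8, 0.2))
txt <- rbind(c(1.0, 0.0, 0.1),
             c(0.0, 1.0, 0.1))
prompts <- c("a photo of a cat", "a photo of a dog")

zero_shot(img, txt, prompts)
clip_loss(img, txt)            # matched pairs: low loss
clip_loss(img, txt[2:1, ])     # mismatched pairs: higher loss
```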

Vision-Language Transformers (e.g., BLIP, LLaVA): Unified models that jointly process image and text tokens.

Multimodal Pretraining: Large-scale training on image–text datasets (e.g., LAION).

Model compression

Model compression reduces model size and computation while preserving accuracy, leading to faster inference and lower memory usage.

Pruning: Remove redundant weights or neurons to create a smaller, sparser network.
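One common pruning criterion is weight magnitude: the smallest weights are assumed to matter least. The base R sketch below shows unstructured magnitude pruning on a hypothetical weight matrix; `magnitude_prune()` and its `sparsity` argument are illustrative names. In practice the pruned network is usually fine-tuned afterwards, and structured variants remove whole neurons or filters instead of individual weights.

```r
# Magnitude pruning: zero out the fraction `sparsity` of weights with the
# smallest absolute values
magnitude_prune <- function(w, sparsity = 0.5) {
  k <- floor(sparsity * length(w))       # number of weights to remove
  if (k == 0) return(w)
  idx <- order(abs(w))[seq_len(k)]       # indices of the k smallest |w|
  w[idx] <- 0
  w
}

# Hypothetical 2 x 4 weight matrix
w <- matrix(c(0.80, -0.05, 0.30, -0.60,
              0.02, -0.90, 0.10,  0.40), nrow = 2, byrow = TRUE)
pruned <- magnitude_prune(w, sparsity = 0.5)
pruned
mean(pruned == 0)   # fraction of zero weights: 0.5
```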

Quantization: Reduce the numerical precision of weights/activations (e.g., float32 → float8 or int8).
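A minimal sketch of one common scheme, symmetric per-tensor int8 quantization, in base R: a single float scale maps each weight to an integer in [-127, 127], and multiplying back by the scale approximately recovers it. The helpers `quantize_int8()` and `dequantize()` are hypothetical, and R stores the integers in 32 bits, so the memory saving is only conceptual here.

```r
# Symmetric per-tensor int8 quantization: one float scale per tensor, values
# mapped to integers in [-127, 127] (R stores them as 32-bit integers; a real
# runtime would pack them into 8 bits)
quantize_int8 <- function(w) {
  scale <- max(abs(w)) / 127
  if (scale == 0) scale <- 1          # all-zero tensor: any scale works
  q <- as.integer(round(w / scale))
  list(q = q, scale = scale)
}

# Approximate reconstruction of the original floats
dequantize <- function(qw) qw$q * qw$scale

w <- c(0.50, -1.27, 0.003, 0.90)
qw <- quantize_int8(w)
qw$q
dequantize(qw)
max(abs(dequantize(qw) - w))   # rounding error, at most scale / 2
```

Small weights such as 0.003 round to zero, which is one source of accuracy loss that calibration or quantization-aware training tries to limit.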

Knowledge Distillation: Train a compact student model to mimic the predictions or representations of a larger teacher model.
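A common way to make the student "mimic the predictions" of the teacher is to match temperature-softened probabilities. The base R sketch below implements the usual soft-target loss for a single example: a weighted sum of the KL divergence between softened teacher and student outputs (scaled by T²) and the ordinary cross-entropy with the true label. The logits, `temperature = 4` and `alpha = 0.5` are hypothetical choices for illustration.

```r
# Softmax with temperature (subtracting the max keeps exp() stable)
softmax <- function(z, temperature = 1) {
  e <- exp((z - max(z)) / temperature)
  e / sum(e)
}

# Soft-target distillation loss for one example:
# alpha * T^2 * KL(teacher_soft || student_soft) + (1 - alpha) * CE(label, student)
distillation_loss <- function(student_logits, teacher_logits, label,
                              temperature = 4, alpha = 0.5) {
  p_teacher <- softmax(teacher_logits, temperature)
  p_student <- softmax(student_logits, temperature)
  kl <- sum(p_teacher * (log(p_teacher) - log(p_student)))
  hard_ce <- -log(softmax(student_logits)[label])
  alpha * temperature^2 * kl + (1 - alpha) * hard_ce
}

# Hypothetical logits for 3 classes; the true class is 1
teacher       <- c(5, 2, 1)
student_close <- c(4.8, 2.1, 0.9)
student_far   <- c(1, 4, 2)

distillation_loss(student_close, teacher, label = 1)   # small
distillation_loss(student_far, teacher, label = 1)     # much larger
```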

Exercises 3

Select the best-performing NN and CNN models we have trained so far. Then, for training image No. 2, visualize their vanilla (raw) gradients. In addition, generate a Grad-CAM visualization for the CNN model.

Slides URL: https://ibsar-cv-workshop.patrickli.org/